Apple is bringing agentic coding to Xcode. On Tuesday, the company announced Xcode 26.3, which lets developers use agentic tools, including Anthropic’s Claude Agent and OpenAI’s Codex, directly in Apple’s official app development suite.

The Xcode 26.3 Release Candidate is available now to all Apple Developers through the company’s developer website, and an App Store release will follow at a later date.

This update follows last year’s release of Xcode 26, which incorporated support for ChatGPT and Claude in Apple’s integrated development environment (IDE) for building applications across iPhone, iPad, Mac, Apple Watch, and other Apple platforms.

With agentic coding tools, AI models can tap into more of Xcode’s features and carry out more complex automation.

The models will also have access to Apple’s current developer documentation, so they can use the latest APIs and follow best practices as they build.

At launch, agents can help developers explore their projects, understand their structure and metadata, build them, and run tests to find and fix errors.

Ahead of the launch, Apple worked closely with both Anthropic and OpenAI to refine the experience. The company focused on optimizing token usage and tool calling so that the agents run efficiently in Xcode.

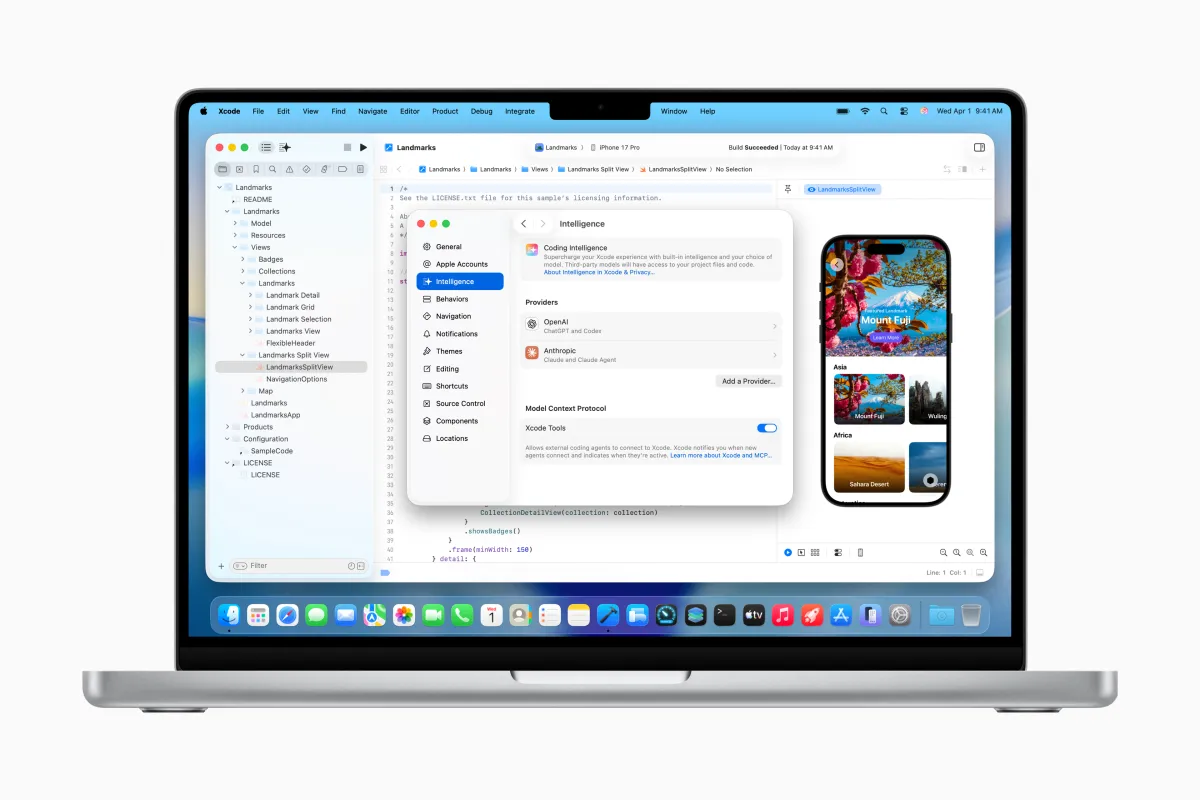

Xcode uses the Model Context Protocol (MCP) to expose its capabilities to agents and connect them with its tools. As a result, Xcode can now work with any MCP-compatible agent for tasks such as project discovery, making changes, file management, previews, snippets, and access to the latest documentation.
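
For readers unfamiliar with MCP, the sketch below shows roughly what this kind of exchange looks like on the wire. MCP messages use the JSON-RPC 2.0 envelope, and the protocol defines `tools/list` and `tools/call` methods for discovering and invoking tools. Apple has not published the tool names here, so `build_project` and its `scheme` argument are hypothetical placeholders, and the sketch skips the `initialize` handshake that a real session starts with.

```python
import itertools
import json

# Each JSON-RPC request carries a unique id so responses can be matched to it.
_ids = itertools.count(1)


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request, the envelope that MCP messages use."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Ask the server which tools it exposes.
list_tools = make_request("tools/list")

# Invoke one tool by name.
# ASSUMPTION: "build_project" and "scheme" are made-up names for illustration;
# they are not taken from Xcode's actual MCP tool list.
call_tool = make_request(
    "tools/call",
    {"name": "build_project", "arguments": {"scheme": "MyApp"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

Because every tool is described and called through the same two methods, an agent does not need Xcode-specific code to use it, which is what makes "any MCP-compatible agent" plausible.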

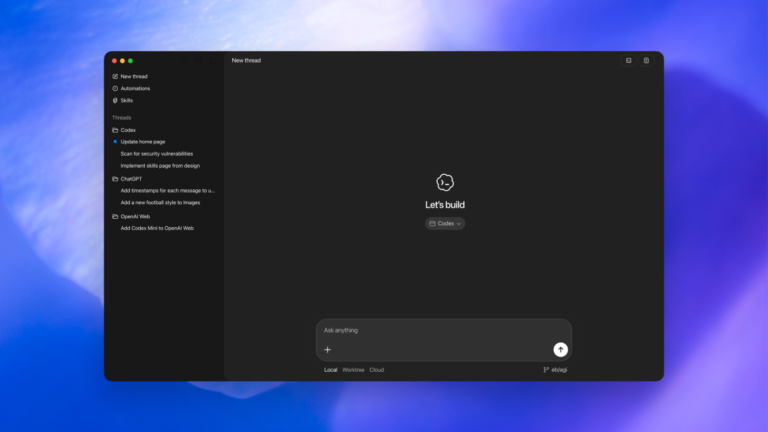

To use agentic coding, developers first download the agents they want from Xcode’s settings. They can also connect their accounts with AI providers by signing in or entering their API keys. A drop-down menu in the app lets them choose which model version to use (e.g., GPT-5.2-Codex vs. GPT-5.1 mini).

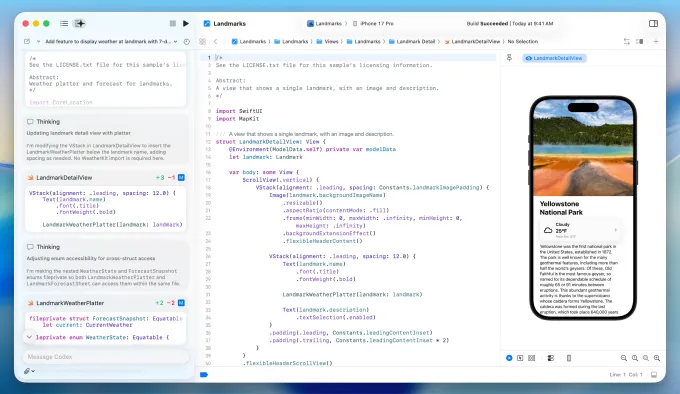

Using a prompt box on the left side of the screen, developers can tell the agent in natural language what kind of project they want to build or what changes they want made to the code. For example, they could ask Xcode to add a feature that uses one of Apple’s frameworks and describe how it should look and work.

As the agent works, it breaks tasks into smaller steps, so developers can follow the changes in real time. It also looks up the documentation it needs before it starts coding. Changes to the code are highlighted visually, and a project transcript on the side of the screen shows what is happening under the hood.

Apple believes this transparency could be especially helpful for developers who are just learning to code. To that end, the company is hosting a “code-along” workshop on its developer site on Thursday, where participants can learn to use the agentic coding tools while following along in real time in their own copy of Xcode.

When it finishes a task, the agent verifies that the code it wrote works. Based on the test results, it can iterate further to fix any problems it finds. Apple notes that asking the agent to plan before it writes code can improve the process by forcing it to think ahead.
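
The verify-and-iterate cycle described above can be pictured as a simple loop. This is a minimal sketch of the general pattern, not Apple’s or any vendor’s implementation: `run_tests` and `apply_fix` are hypothetical callbacks standing in for whatever the agent does through Xcode, and the iteration cap is an assumption added to keep the loop bounded.

```python
from dataclasses import dataclass, field


@dataclass
class CheckResult:
    """Outcome of one test run: overall status plus the failing items."""
    passed: bool
    failures: list = field(default_factory=list)


def run_agent_loop(run_tests, apply_fix, max_iterations=5):
    """Run tests, fix each failure, and repeat until green or out of budget.

    ASSUMPTION: run_tests and apply_fix are hypothetical callbacks, not
    real Xcode or agent APIs.
    """
    transcript = []
    for attempt in range(1, max_iterations + 1):
        result = run_tests()
        if result.passed:
            transcript.append(f"attempt {attempt}: tests passed")
            return True, transcript
        transcript.append(f"attempt {attempt}: {len(result.failures)} failure(s)")
        for failure in result.failures:
            apply_fix(failure)
    return False, transcript


# Toy stand-in for a project with two known problems.
remaining = ["missing import", "off-by-one"]


def fake_run_tests():
    return CheckResult(passed=not remaining, failures=list(remaining))


def fake_apply_fix(failure):
    remaining.remove(failure)


ok, transcript = run_agent_loop(fake_run_tests, fake_apply_fix)
print(ok)
print(transcript)
```

The transcript list plays the role of the side-panel log: each pass through the loop leaves a record of what the agent saw and did.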

If developers are unhappy with the results, they can revert their code to its earlier state at any time, because Xcode creates a milestone each time the agent makes a change.
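
Apple has not said how milestones are stored, but the idea of a restore point per change can be illustrated with a generic snapshot stack. In the sketch below, `MilestoneStore` and its methods are hypothetical names, and copying the whole file map on every change is a simplification chosen for clarity rather than efficiency.

```python
class MilestoneStore:
    """Keeps a snapshot of the project files before each agent change.

    ASSUMPTION: a generic illustration, not a description of how Xcode
    implements milestones.
    """

    def __init__(self, files):
        self.files = dict(files)   # path -> current contents
        self._milestones = []      # snapshots taken before each change

    def apply_change(self, path, new_text):
        """Snapshot the current state, then apply the change.

        Returns the index of the milestone that undoes this change.
        """
        self._milestones.append(dict(self.files))
        self.files[path] = new_text
        return len(self._milestones) - 1

    def revert_to(self, index):
        """Restore the state from before change `index`; drop later milestones."""
        self.files = dict(self._milestones[index])
        del self._milestones[index:]


store = MilestoneStore({"App.swift": "v0"})
first = store.apply_change("App.swift", "v1")
store.apply_change("App.swift", "v2")

# Undo everything the agent did, back to the state before its first change.
store.revert_to(first)
print(store.files)
```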